If you’re running an online business, it’s one of the worst things that can happen: your site could fail or your app could break. And you’ve probably heard of the famous Silicon Valley mantra “Fail Fast, Fail Often”, but that’s definitely not what you want your servers to do. How do you avoid this?

Murphy’s Law

The thing is, you can’t avoid it. As Murphy’s Law states, “anything that can go wrong, will go wrong”. Online systems are highly complex infrastructures built out of many different hardware and software components. Even the simplest of WordPress installs already comes with a web server, a database, and then the CMS itself, all of which will, at some point, break. And a complex modern media or ecommerce site usually has many more services in the mix than that, which means you’re basically building a house of cards. And in all likelihood, you’ll then get various hackers blowing at the cards, too.

If you’re a developer or sysadmin reading this, you will probably, at this stage, think “well obviously this guy doesn’t know how to build a Robust System.” (More likely, though, you’re really hoping nothing will break, so you can finally go home to your family, play with your kids, and maybe get some sleep.) Or, if you’re a manager, you may think “well that’s intriguing, because that’s not what my developers and sysadmins are telling me”. (It’s probably because they’re hoping they can finally go home to their families, play with their kids, and maybe get some sleep.)

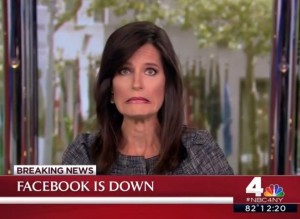

So how much of a fact is failure? Well, just ask tech giants like Google, Facebook, or Netflix. With their resources, brain trust, and money, you’d think their systems would be infallible. Instead, their outages are just a lot more publicised, causing anything from derision to mild panic. They still happen.

So failure is not something to ignore; it’s something to embrace. How do you make it less likely, and how do you minimise the negative impact when it happens anyway? I’m not going to go into technical specifics, but there are some broad principles I’ve found useful over the years. This is not a manual on high availability, just some things to keep in mind.

Single Points of Failure

A chain is only as strong as its weakest link. Which is probably why, if you want to secure something important, you’d want to use two chains or more, not just one. But follow those chains, because often, you may think you’ve added extra redundancy — only to find out that there’s one point where multiple chains link to a single hook. The more servers there are in a content management or e-commerce system, the more likely you are to find that in reality, everything is moving through several funnels. Those are also the points where things break catastrophically (or if they keep running, under stress, they may just choke the flow). You’ll want to find these before one of your stories goes viral or the shopping season starts. If you haven’t found them, you haven’t looked hard enough.
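To make “follow those chains” a bit more concrete, here is a minimal sketch in Python. It assumes you can write your setup down as a simple map of which component talks to which. The component names (`visitors`, `web-1`, `db-proxy`, and so on) are made up for illustration. It removes each component in turn and checks whether a visitor can still reach the storage at the end of the chain.

```python
from collections import deque


def reachable(graph, start, goal, removed=None):
    """Breadth-first search from start to goal, pretending `removed` is down."""
    if removed in (start, goal):
        return False
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in graph.get(node, []):
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def single_points_of_failure(graph, start, goal):
    """Components (other than the two endpoints) whose loss cuts every path."""
    nodes = set(graph) | {n for targets in graph.values() for n in targets}
    candidates = nodes - {start, goal}
    return sorted(
        n for n in candidates if not reachable(graph, start, goal, removed=n)
    )


# Hypothetical stack: two web servers and two databases look redundant,
# but both chains hang on the same hook in the middle.
stack = {
    "visitors": ["web-1", "web-2"],
    "web-1": ["db-proxy"],
    "web-2": ["db-proxy"],
    "db-proxy": ["db-1", "db-2"],
    "db-1": ["storage"],
    "db-2": ["storage"],
}

print(single_points_of_failure(stack, "visitors", "storage"))
```

In this made-up example the only component reported is `db-proxy`. The endpoints themselves are left out of the check, so a real review would still need to ask what happens when the storage itself goes away.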

Adding Stuff

Whenever you mention fear of failure to a developer or sysadmin, especially for that single point of failure you’ve just found, the proposed solution is probably going to be redundancy, clustering, backups, (hot) standbys, and so on. This is what I refer to, in highly technical terminology, as “Adding Stuff”. I’m not saying this is always bad, because to a large extent, it’s just part of the basics of any solid infrastructure. But everything that’s added also brings more complexity and new risks. I once witnessed a server with double power supplies go up in flames. The second supply was supposed to be the fail-safe, but in practice, it generated too much heat for the room it was in, and the result was spectacular (though disastrous). A clustered cloud probably won’t burn out quite so literally, but balance the considerations: is it worth it? Keep it as simple as you possibly can. Infrastructures are never “too big to fail”.

The Mythical SLA

When things go wrong, whatever the salesperson promised you in the contract won’t matter much. “Our site is down, but fortunately, the SLA with our provider promises 99.8% availability so it’s going to be fine”, said no sysadmin ever. That’s not to say the agreements don’t matter, but they’re not a magical formula against all ailments: you won’t cast away gremlins with Five Nines. And you may have carefully read the fine print, but did you fully realise the consequences? I recently got a proposal for “99%, measured across the year”, which doesn’t sound unreasonable, until you realise that if the 1% is contiguous, it might mean more than 3 days of downtime. And if you’ve built your house of cards on multiple, chained services with various uptime guarantees, the actual risk to the infrastructure as a whole stacks up: the combined risk percentages make for a fun high school math exercise.
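For those who want to do the math exercise, here is a rough sketch in Python. It assumes a 365-day year, that every service in the chain is required for the site to work, and that the services fail independently of each other (which is optimistic). The function names are mine, not anything from a real SLA tool.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: a non-leap year


def allowed_downtime_hours(availability):
    """Hours per year a provider may be down and still meet the promise."""
    return (1.0 - availability) * HOURS_PER_YEAR


def chained_availability(availabilities):
    """Availability of a chain in which every service must be up.

    Assumes independent failures, so the individual figures multiply.
    """
    combined = 1.0
    for availability in availabilities:
        combined *= availability
    return combined


# "99%, measured across the year"
days = allowed_downtime_hours(0.99) / 24
print(f"99% allows about {days:.2f} days of downtime per year")

# Three chained services that each promise 99.9%
combined = chained_availability([0.999, 0.999, 0.999])
print(f"Three 99.9% services in a chain: {combined:.4%} combined")
```

The 99% promise works out to roughly 3.65 days a year, and three chained services at 99.9% each end up at about 99.7% together, which is a noticeably weaker promise than any of the individual contracts suggests.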

No matter what compensation an SLA promises, it probably won’t cover the damage. It’s much more useful to have a reliable provider or partner than some theoretical uptime SLA. Of course, that’s much harder to assess than just a simple number, but it really pays to check references, and build a relationship. Because that’s what will get things to work again when things inevitably fail. You can let the accountants work out how many credits you will get in return for how many minutes of outage later, when things are working again.

Degrading Gracefully

Getting back to that house of cards again: wouldn’t it be great if you could just take one or a few out, and it would still be standing? Maybe a more useful game to play would be Jenga. Have a very careful look at your stack of blocks and consider what would happen if you take them out one by one.

A website doesn’t necessarily have to go down because the search doesn’t work. An app doesn’t have to stop working because the images aren’t available. Many interactive features (commenting, rating, and so on) are essentially expendable, if need be. Avoid dependencies wherever possible: why create more single points of failure? There will always be some you can’t avoid, even if you know where they are. But no Jenga tower ever got more stable by adding more blocks. Fortunately, online systems aren’t entirely like Jenga: there’s no rule that says you can’t quickly replace a block that was just taken out. Prepare for that.
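As a small illustration of taking a block out without the tower falling, here is a sketch in Python. It assumes a page that shows an article plus some search-driven “related” links. The helper `with_fallback`, the `render_page` function, and the broken search backend are all hypothetical.

```python
def with_fallback(fetch, fallback):
    """Call an expendable feature; if it fails, return the fallback instead."""
    try:
        return fetch()
    except Exception:
        # In a real system you would log this, so someone knows to
        # put the block back.
        return fallback


def broken_search():
    """Stand-in for a search backend that is currently down."""
    raise ConnectionError("search backend unreachable")


def render_page(article, search):
    """The article is essential; the related links are expendable."""
    return {
        "article": article,
        "related": with_fallback(search, []),
    }


page = render_page("Embracing Failure", broken_search)
print(page)
```

The page still renders with an empty list of related links, instead of the whole request failing because one optional feature was unavailable.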

Embracing Failure

I mentioned earlier that you should embrace failure. So, you’ve had a critical look at what you’re running; you know where things could go wrong, there are redundancies where it makes sense, you haven’t overcomplicated the setup, a partial outage won’t bring the house down, and you’re realistic about how much Service you can expect out of your Level Agreement. And then, the system is down.

Let me just illustrate this with an anecdote. Back in the nineties, I was sysadmin for a shipping company. Working the Saturday shift in an empty office, I noticed a rapidly growing queue of trucks outside. (In fact, traffic quickly backed up all the way to the highway, where it ended up as several kilometers of standstill traffic, but thankfully, I couldn’t see that from my window.) The database was locked, the network was overloaded, and nothing was moving. As I was trying to diagnose the problem, one of the floor managers barged into the office and barked “This is costing us €100,000 an hour! It needs to be fixed NOW!” This was a part-time job, paying for my tuition, and I think I made about €7 an hour. I didn’t need the job (or at least, not specifically that job), and I didn’t need somebody yelling at me; and I must have looked fairly €7-an-hour unimpressed with the number that was just thrown at me. At any rate, he suddenly realised he was keeping me from actually solving the problem, and instead offered to get me some coffee, and then started answering the continuously ringing phones (with other angry people demanding an immediate solution).

It took about half an hour to get the system back online. It took us another few days to figure out what alignment of stars had caused the issue to come up, and several weeks to change the code so it wouldn’t happen again. And crucially, nobody was blamed for it, because it was something nobody could possibly have foreseen; and because nobody was trying to shift the blame, we got to find the problem and solve it (instead of wasting more energy on pointing fingers).

Which taught me that what defines an online business is not how well you avoid systems failure. You can reduce the downtime, but you can never eliminate it. What really defines an online business is how well you deal with the systems failure.

Or, for the management abstract: when things go wrong, don’t be surprised, because that would be pretty naive. And when it happens, either you’re fixing things or you’re getting the coffee.