First, let’s be clear: I really like Hadoop, and not just because it’s named after a yellow toy elephant. But over the past few years, “Hadoop” has also become an almost mystical term, happily sprinkled throughout marketing brochures. So, to be fair, it’s not Hadoop that is the problem; the problem is how Hadoop is perceived. I’m not going to go into great technical detail, but there are a few problems with that perception.

Hadoop is a Hype

A few years back, Hadoop would inevitably be described as “open source Google”. Google famously used MapReduce, and a distributed file system, to create their huge search index. And the same technology was now available to anybody, as open source, with Hadoop. This narrative fits right in with the simultaneously converging hypes around “Marketing Technology”, “Big Data”, and “Cloud”. So much so that it’s all too easy to combine the buzzwords in one sentence: “Marketing technology that uses Hadoop to analyse big data in the cloud”. That probably sounds uncannily familiar, right? And there are plenty of companies that would like to sell you on that, either as a system (better still, a “suite”) or as services around it.

But that blurb of buzzwords doesn’t actually mean anything. “Marketing technology” is a problem, because most marketeers don’t know all that much about technology. “Big Data” is more or less defined as “more data than your company was used to processing before”, which is rather relative. And “Cloud” is vacuous vapour. The term is used for anything from hosting virtual servers to “something as a service” (“XaaS”). And you may remember the rather hilarious “cloud in a box” (also known as… “a server”). In short, a cloud is a visible mass in the sky that appears to have substance but consists of vapour.

In that context, Hadoop is actually quite tangible. At least it’s an Apache Project you can actually download. It doesn’t really deserve to be dismissed as a buzzword. But that’s exactly what happens if you read the simplified “open source Google” descriptions, which focus on MapReduce. Or when MapReduce is described as the only possible way to deal with Big Data.

If you don’t really know what MapReduce is, you’re not alone. I like IBM’s simple explanation of what MapReduce, in essence, actually is:

As an analogy, you can think of map and reduce tasks as the way a census was conducted in Roman times, where the census bureau would dispatch its people to each city in the empire. Each census taker in each city would be tasked to count the number of people in that city and then return their results to the capital city. There, the results from each city would be reduced to a single count (sum of all cities) to determine the overall population of the empire. This mapping of people to cities, in parallel, and then combining the results (reducing) is much more efficient than sending a single person to count every person in the empire in a serial fashion.
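
To make the analogy a little more concrete, here is a minimal, single-machine Python sketch of the census as map, shuffle, and reduce steps. It is an illustration only, not Hadoop’s API: the records, city names, and function names are all hypothetical, and the map calls run one after another rather than on separate nodes.

```python
from collections import defaultdict

# Hypothetical census data: one (city, resident) record per person.
records = [
    ("Roma", "Gaius"), ("Roma", "Livia"), ("Roma", "Marcus"),
    ("Londinium", "Aelia"), ("Londinium", "Brutus"),
    ("Lutetia", "Quintus"),
]

def map_phase(record):
    """Map: a census taker emits (city, 1) for each person counted."""
    city, _resident = record
    return city, 1

def shuffle(pairs):
    """Group the emitted values by key (city), as the framework would."""
    grouped = defaultdict(list)
    for city, count in pairs:
        grouped[city].append(count)
    return grouped

def reduce_phase(city, counts):
    """Reduce: combine all counts for one city into a single number."""
    return city, sum(counts)

# Each map call is independent, so on a cluster these could run in parallel;
# here they simply run one after another.
mapped = map(map_phase, records)
per_city = dict(reduce_phase(city, counts) for city, counts in shuffle(mapped).items())
empire_total = sum(per_city.values())

print(per_city)      # {'Roma': 3, 'Londinium': 2, 'Lutetia': 1}
print(empire_total)  # 6
```

The final `sum` over the per-city results plays the role of the capital adding up the cities into one count for the empire.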

As a method, it’s not really Google’s invention (though I wouldn’t go so far as to credit Roman emperors with the idea). You can see a nice 1964 clip of data being mapped and reduced here; IBM has always been pretty good at selling this kind of business intelligence:

At any rate: great, so now you know what MapReduce actually does. But what are you going to map and reduce?

Obviously, MapReduce can be incredibly useful. But it’s also a very limiting paradigm. There are a lot of problems that are quite hard to structure so they can be mapped and then reduced. There are a few killer applications for it. It’s not accidental that Google used it, and that it was then used for the Nutch crawler for Lucene: if you’re following every link on every page and storing the results, it makes sense to farm these tasks out to many servers, mapping and reducing. But in many cases, you don’t want to force MapReduce into the equation. Google confessed to more or less having stopped using MapReduce as far back as 2010. And even Apache Mahout, the project named for the elephant driver steering Hadoop, officially said goodbye to MapReduce in April this year, moving “onto modern data processing systems that offer a richer programming model and more efficient execution than Hadoop MapReduce”.
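
The crawler case is a good fit because each page can be processed independently. As a rough sketch (hypothetical data and function names; threads stand in for cluster nodes, and this is not how Nutch or Hadoop is actually invoked), counting inbound links per page splits naturally into independent map tasks and one merging reduce step:

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical crawl results: page -> outgoing links found on that page.
crawl = {
    "a.html": ["b.html", "c.html"],
    "b.html": ["c.html"],
    "c.html": ["a.html", "b.html"],
    "d.html": ["c.html"],
}

def map_links(item):
    """Map: for one crawled page, count 1 for each link target it contains."""
    _page, links = item
    return Counter(links)

def count_inbound_links(pages, workers=4):
    # Map tasks don't depend on each other, so they can be handed out to
    # workers (threads here, standing in for servers in a cluster).
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_links, pages.items()))
    # Reduce: merge the partial counts into one total per target page.
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total

print(count_inbound_links(crawl))
```

Problems that don’t split into independent pieces like this (for example, when each step needs the full result of the previous one) are where the paradigm starts to feel limiting.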

I could write similar paragraphs about HDFS (the Hadoop Distributed File System). But again, that’s selling Hadoop short. It’s not so much one project or technology; it’s a whole set of tools (together with HBase, Pig, Hive and so on), and it’s closely related to several other Apache projects (it started out as part of the Lucene project). It’s definitely not just MapReduce or HDFS, but that’s apparently where a lot of the hype in the glossy management magazines is still stuck.

And that’s the problem with hype: it stops people from sensibly considering alternatives, and then picking the right tools for the job. You can’t do that before you understand exactly what your problem is. And you won’t get anywhere by blindly picking Hadoop before you ever get started.

Hadoop is just a Tool

If you’re reading this because you’re looking for “marketing technology”, the paragraphs above are probably already too technical. But that’s my second point: it’s just a technical tool.

Even if you’d like to think you’re building a revolutionary, disruptive product (or app, or system, or service, or shop), does that mean you really need to build all of the tools yourself? If you’re going to reinvent the car (as Tesla, for instance, did), does that mean you also want to reinvent the wheel? Tesla has a very different take on many aspects of their cars, but they still use wheels.

Over the past few months, I’ve seen a lot of “build vs. buy” debate around Marketing Technology. But make no mistake: that debate is about either “buying one marketing cloud” (the integrated suites that the likes of Adobe would like to sell you on, which supposedly work “turn-key”, with little or no effort) or tying together, that is “building”, various “best of breed” systems. In that sense, I would immediately say I’m very much on the “build” side of things. But that’s because implementing integrations of existing systems is a very doable kind of building. Either way, a vendor might try to impress you by saying they use Hadoop; but the point is, you’re paying them so you don’t have to care too much how they do it.

Of course, in some cases, you might be able to create a major competitive advantage by building at least some of the parts in a completely custom way. A bit like how Tesla is opening their own battery factory, or Apple is manufacturing their own sapphire for Apple Watch screens. But even if you are going to build things from scratch, the modern reality is that there are very diverse means to an end. The process for this is whittling down the actual business case, which lets you get to families of tools to use. Then to specific tools, and looking at how they would fit together. Then rethinking, and then narrowing it down again. It’s not something you begin by saying “let’s use Hadoop for this”; that’s just one possible outcome.

Hadoop Clusters are Expensive

Suppose you do have a business case for a very custom solution, and you’re about to hire your own data scientists. Don’t forget to have everything in place to actually let them do their job. They may have the skills you’d expect (standard expectations would be Python, R, and of course, a healthy understanding of statistical analysis). Yet if you don’t watch out, they’ll spend a lot of time on “data janitorial work” (as the NYT recently called it, somewhat simplistically). Systems need to be set up, kept running, and administered. They need to be loaded with data that has to be extracted, exported, imported, cleaned, and normalised. A minimal team wouldn’t just have data scientists; you’d really have to add at least developers and sysadmins. A specialised team like that is hard to hire, and expensive to keep.

Then there’s also the expense of running the analysis. Hadoop was designed to run on “commodity hardware”. But while the cost of one node may be low, the whole point is to have a large cluster. Even if you run on $500/month servers, multiply that by 100 servers for 12 months, and you’re looking at $600k a year. Don’t be surprised if you end up with similar calculations (or much worse). Add to this that Hadoop doesn’t work all that well in “cloud” environments (it’s designed to run on a large number of commodity servers, and virtualisation takes away some of the benefits).
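
The back-of-the-envelope sum above is easy to rerun with your own numbers. A trivial Python sketch (the function name is hypothetical, and it covers server cost only, leaving out staff, licences, and data transfer):

```python
def annual_cluster_cost(monthly_cost_per_node, nodes, months=12):
    """Rough server cost only; staff, licences and data transfer are excluded."""
    return monthly_cost_per_node * nodes * months

print(annual_cluster_cost(500, 100))  # 600000, i.e. the $600k above
print(annual_cluster_cost(500, 20))   # 120000 for a smaller, 20-node cluster
```

Because the cost scales linearly with node count, the size of the cluster you actually need matters far more than the price of any single node.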

If you’re thinking of replacing an expensive, traditional data warehouse that cost tens or hundreds of millions, that may still sound like a great deal. But if you’re running an online business, and are starting something new from scratch, it’s a lot of money to earn back in advertising or sales. You might find there are plenty of productised, more out-of-the-box services you could procure for a fraction of the cost, since these are specialised companies that have already built and scaled something for you. You won’t have to care whether they used Hadoop to do it, and if they’re not delivering results, you can always switch.

Hadoop is not Real Time

From the point of view of online marketing/media/ecommerce, the biggest problem with Hadoop is that it was never designed for real-time processes. This is somewhat counter-intuitive. But while Hadoop makes huge amounts of data manageable, that doesn’t mean it’s fast. In fact, Hadoop is known for being quite slow. A Hadoop cluster is something you’d usually throw at a job that can run for a few days to crunch the data. Or maybe every night. But in online projects, many analysis results have to be available right now, driving integrations and shaping environments around users.
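
The difference between a batch job and real-time processing can be shown with a toy, in-process Python sketch. It does not use Hadoop or any streaming framework, and the class names and product-view counter are hypothetical: the batch version only updates its results when the job runs, while the incremental version updates on every event.

```python
from collections import Counter

class BatchCounts:
    """Batch style: results are refreshed only when the (say, nightly) job runs."""
    def __init__(self):
        self.log = []             # raw events pile up here
        self.results = Counter()  # last computed results

    def record(self, product_id):
        self.log.append(product_id)  # not visible in results yet

    def run_job(self):
        self.results = Counter(self.log)  # full recomputation over all data

class StreamingCounts:
    """Incremental style: each event updates the result immediately."""
    def __init__(self):
        self.results = Counter()

    def record(self, product_id):
        self.results[product_id] += 1

batch, stream = BatchCounts(), StreamingCounts()
for product in ["shoes", "hat", "shoes"]:
    batch.record(product)
    stream.record(product)

print(batch.results["shoes"])   # 0: the job hasn't run yet
print(stream.results["shoes"])  # 2: already up to date
batch.run_job()
print(batch.results["shoes"])   # 2: but only after the job
```

For a visitor on your site right now, the first answer is the one a batch-only setup gives you.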

Yes, there are various ways to “hide” that the processing is going on. And yes, there are various ways to speed it up or even run Hadoop more or less in real time. But it’s not really what it was meant to do, and usually not the easiest way to do it.

So why use Hadoop?

As I started out by saying, I really like Hadoop. I could easily sum up plenty of uses, but case studies are easy to find, and I didn’t set out to join the choir. When datasets become (much) too large for desktop tools, and then unwieldy in even a nicely scaled database cluster, Hadoop merits consideration. If you have a large infrastructure with tons of data, it may be a much more cost-effective alternative. And if you’re creating an online service or product that involves a lot of data crunching, it could be a great way to tackle that. But that’s not an excuse to mindlessly adopt it because “everybody is doing it”.

Should you be using Hadoop? There’s no simple yes or no to that. It’s come a long way since 2004, when it was basically just part of the Nutch webcrawler for Lucene; and now, ten years later, a sprawling ecosystem is flourishing around it. But the same business problems could be solved with, say, a NoSQL database (Cassandra, CouchDB, MongoDB, and so on); or indexing and search (Solr, Elasticsearch); or a more traditional (clustered) SQL database; or, yes, Hadoop and assorted tools; probably in combination. And in many cases, you might want to take the approach of the cooking show cliché: “usually, this would take several months, but here’s one we prepared earlier”, and get the cake ready-made, so you just have to add the icing.

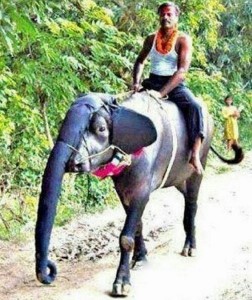

So don’t try to tame the Hadoop elephant just because it looks so cuddly. It can pull a huge weight, but it can just as easily crush you in a corner. It’s the real thing, and not just a toy.